Collaboration Optimization - Configuration

Overview

The collaboration optimization library pack allows you to measure the actual usage and adoption of a chosen real-time collaboration tool, detect shadow collaboration, engage with users for further context, and identify resistance to change.

Prerequisites

The Collaboration Optimization pack utilizes Focus Time for score generation. This feature was introduced for Windows clients in Nexthink v6.29 and for macOS clients in v6.30.

Therefore, if you have only Windows clients, this pack can be installed on Nexthink v6.29 or later (On Premise) or 2020.5 or later (Cloud). If you have both Windows and macOS clients, Nexthink v6.30 or later (On Premise) or 2021.1 or later (Cloud) is required.

The pack also uses Digital Experience Score (DEX) 2.x, so please download the latest version of DEX for your environment if it is not already installed.

This pack is built on the concept of Personas introduced in the Persona Insight pack. However, it is a standalone pack, and Persona Insight does not need to be installed for it to function.

Focus Time

The main score file in this pack uses Focus Time, a feature that, once enabled, collects data on application usage: the time during which an application's window is in focus.

The Focus Time Monitoring feature is available for Windows clients from Version 6.29 of Nexthink and for macOS clients from Version 6.30.

Please note that this feature is disabled by default on both operating systems. To enable it, refer to the Focus Time guide on the Nexthink documentation portal.

Customization

Pack structure

This pack is structured similarly to the Persona Insight pack. Whilst it is a standalone pack, some familiarity with Persona Traits and Personas may prove useful when configuring it.

Categories

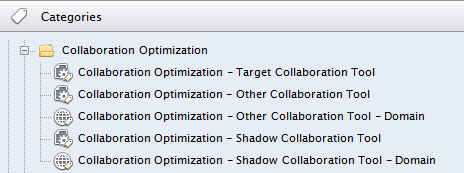

This pack contains 5 categories.

The categories identify the real-time collaboration tools monitored by this pack. They are all empty by default, so you must populate them for the pack to function correctly.

Please note: if you have macOS clients in your organization, you must specify the executable names for Windows and macOS as separate conditions.

The target collaboration tool category is the most important, as it specifies the collaboration tool that most of this pack is concerned with. All metrics and dashboards are deliberately designed not to refer to any specific application name. This means the pack can track the usage of any application, be it MS Teams, Zoom or Slack, simply by specifying it at the category level; no other modification is necessary.

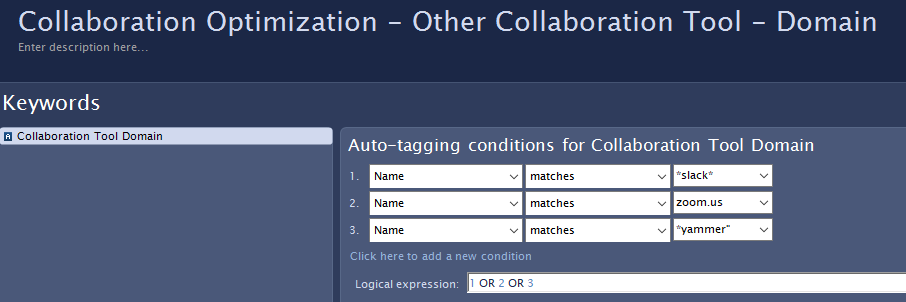

The next category lets you specify the “other” collaboration tools in use in your organization. If these applications are web-based, you can specify their domain addresses in a separate category.

Similarly, there are two categories for the “shadow” collaboration tools and domains whose usage you want to measure.
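To picture how the five categories fit together, the sketch below models them as plain Python data and looks up which category an executable or domain belongs to. This is an illustration only, not how Nexthink stores or evaluates categories: the category labels are descriptive, matching is simplified to exact names, and every executable and domain name is a hypothetical placeholder to be replaced with the real names from your environment. Note that the Windows and macOS executables of the same tool are separate entries.

```python
from typing import Optional

# Hypothetical contents of the five categories. Each category is modelled as a
# set of exact executable names or domains; the names are illustrative only,
# so check the real executable names in your own environment.
CATEGORIES = {
    # Windows and macOS executables of the same tool are separate entries,
    # mirroring the separate conditions required for mixed estates.
    "Target collaboration tool": {"Teams.exe", "Microsoft Teams"},
    "Other collaboration tool": {"Zoom.exe", "zoom.us"},
    "Other collaboration domain": {"meet.example.com"},
    "Shadow collaboration tool": {"ShadowChat.exe", "ShadowChat"},
    "Shadow collaboration domain": {"chat.example.net"},
}


def classify(name: str) -> Optional[str]:
    """Return the category that an executable or domain belongs to, if any."""
    for category, members in CATEGORIES.items():
        if name in members:
            return category
    return None  # not tracked by any category


print(classify("Teams.exe"))        # Target collaboration tool
print(classify("Microsoft Teams"))  # Target collaboration tool
print(classify("notepad.exe"))      # None
```

Switching the target tool, say from Teams to Slack, only changes the data in the first entry; code that consumes classify() is untouched, which is the same design idea the pack applies to its metrics and dashboards.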

With shadow collaboration tools in particular, employees may be using applications that you are not aware of and would not think to enter into the relevant category. An investigation into non-engaged employees may reveal such applications, or they may even be listed in the responses to the non-engaged employee survey.

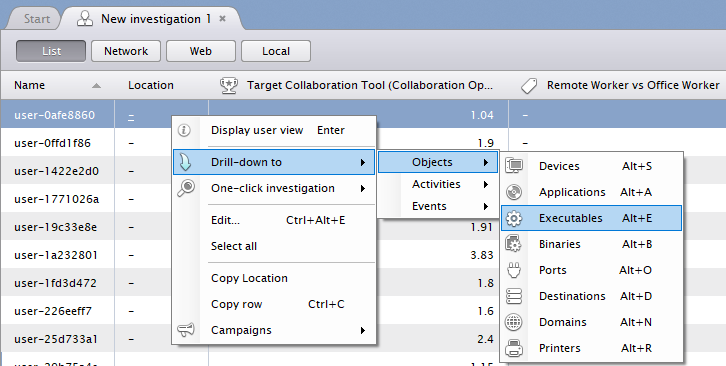

Shadow collaboration tool investigation

In the Finder, run an investigation on the non-engaged employees metric, then right-click one of the employees and drill down to the executables in use. Repeat this for several employees to see whether a pattern emerges of a collaboration program that you were not previously tracking with this pack. If you find one, add it to the shadow collaboration tool category.
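The manual drill-down amounts to counting which untracked executables recur across several non-engaged employees. The sketch below automates that counting on data you have already collected; the drill-down data, the tracked and ignored sets, and the min_employees cut-off of 2 are all hypothetical, and deciding whether a candidate really is a collaboration tool remains a manual judgment.

```python
from collections import Counter

# Hypothetical drill-down results: executables seen for each non-engaged employee.
drilldowns = {
    "employee_a": {"outlook.exe", "chatx.exe", "excel.exe"},
    "employee_b": {"outlook.exe", "chatx.exe"},
    "employee_c": {"chatx.exe", "winword.exe"},
}

# Executables already tracked by one of the pack's categories (hypothetical).
tracked = {"teams.exe", "zoom.exe"}

# Executables known not to be collaboration tools, to be skipped (hypothetical).
ignored = {"outlook.exe", "excel.exe", "winword.exe"}


def shadow_candidates(drilldowns, tracked, ignored, min_employees=2):
    """Return untracked executables used by at least min_employees employees."""
    counts = Counter()
    for executables in drilldowns.values():
        # Count each remaining executable once per employee.
        counts.update(executables - tracked - ignored)
    return sorted(exe for exe, n in counts.items() if n >= min_employees)


print(shadow_candidates(drilldowns, tracked, ignored))  # ['chatx.exe']
```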

If your organization has recently acquired or merged with another company, you may also find that the new employees are using non-approved applications whilst the IT standardization process is ongoing.

Scores

This pack includes two scores: Collaboration Optimization and Collaboration Optimization Summary.

The Collaboration Optimization score measures the usage of the applications specified in the categories described above and scores this usage against a set of ranges. The Collaboration Optimization Summary score references this first score and applies a filter to the scored ranges to define the personas used in this pack. The output of the summary score is binary: either 0 or 10.

The main advantage of this approach is that persona thresholds can be changed by modifying a single score file, and the change takes effect in all metrics and dashboards that reference it.

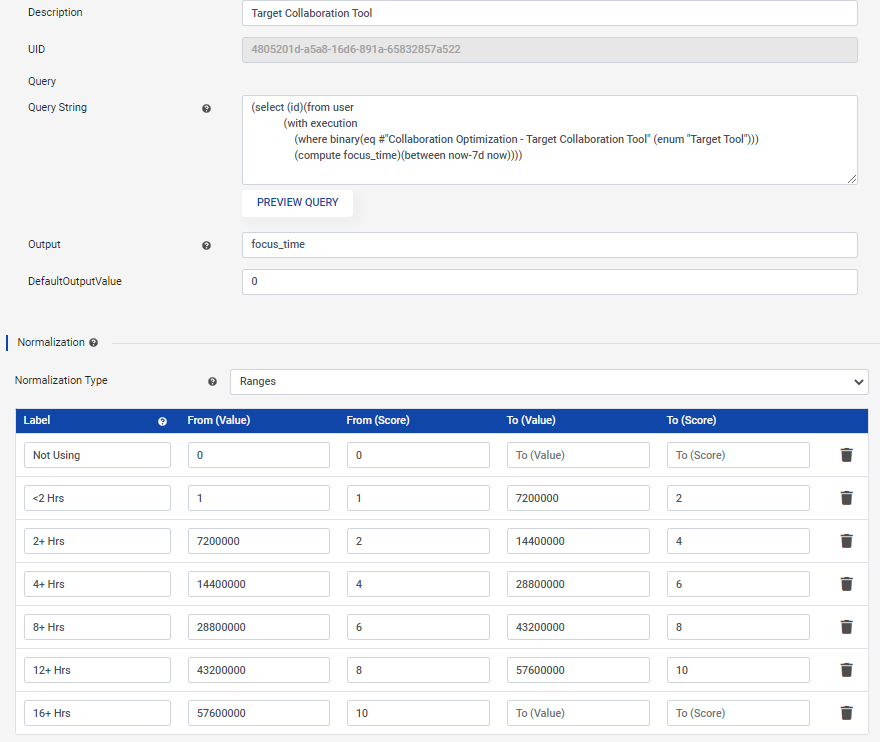

Score Modification

There are, in fact, two ways to alter the thresholds that determine how employees are identified in this pack. The first is to alter the range parameters. Take the Target Collaboration Tool score as an example (see screenshot). For each employee, the amount of time the target tool is used is calculated using Focus Time, and this value is then scored according to the configured ranges. In this case, to score from 8 to 10 the application would need to be in focus for 12 to 16 hours a week.

Two of the scores in this file look at web domains instead of installed applications. In those cases, cumulated_web_interaction_duration is measured instead of focus_time but the principle is the same.
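As a rough model of the range mechanism, the sketch below converts weekly focus hours into a 0 to 10 score, assuming linear interpolation within each range. Only the range in which 12 to 16 hours maps to a score of 8 to 10 comes from the text above; the lower ranges are hypothetical placeholders, and the actual ranges and interpolation used by the score file may differ. For the two web-domain sub-scores, the same function would take cumulated_web_interaction_duration (converted to hours) as its input.

```python
# Hypothetical score ranges: (from_hours, to_hours, from_score, to_score).
# Only the last range (12-16 h/week maps to 8-10) is taken from the text.
RANGES = [
    (0.0, 2.0, 0.0, 2.0),
    (2.0, 6.0, 2.0, 5.0),
    (6.0, 12.0, 5.0, 8.0),
    (12.0, 16.0, 8.0, 10.0),
]


def score_focus_time(hours_per_week: float) -> float:
    """Map weekly focus hours to a 0-10 score, interpolating linearly in each range."""
    top_hours, top_score = RANGES[-1][1], RANGES[-1][3]
    if hours_per_week >= top_hours:
        return top_score  # anything above the last range gets the maximum score
    for lo_h, hi_h, lo_s, hi_s in RANGES:
        if lo_h <= hours_per_week < hi_h:
            return lo_s + (hours_per_week - lo_h) * (hi_s - lo_s) / (hi_h - lo_h)
    return 0.0  # negative input: treat as no usage


print(score_focus_time(14.0))  # 9.0
print(score_focus_time(20.0))  # 10.0
```

Under these assumed ranges, an employee needs more than 14 focus hours a week to score above 9; widening or narrowing the last range is how the first method moves that boundary.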

The second (and easier) way to change the score threshold is to change a value in the NXQL statement in the summary score file.

Taking champion employees as an example: as described above, the first score file measures Focus Time and assigns the result a score. The second (summary) score file loads this score and applies a filter, in this case selecting any employee who scores greater than 9.

Summary score - Champion Employee NXQL:

```
(select (id #"score:Collaboration Optimization/Target Collaboration Tool")
  (from user
    (with user_activity
      (where user
        (gt #"score:Collaboration Optimization/Target Collaboration Tool" (real 9.0)))
      (compute number_of_users)
      (between now-7d now))))
```

Here you can see the threshold is set to 9. You can change this to a lower value to increase the number of employees picked up by the score.
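The filter in this query can be modelled as a simple function: the summary score is 10 when the Target Collaboration Tool score is strictly greater than the threshold (gt) and 0 otherwise, and metrics then count users whose summary score equals 10. The sketch below is a Python illustration of that logic, not NXQL, and the per-user scores are hypothetical. Because gt is a strict comparison, a user scoring exactly 9.0 is not counted.

```python
CHAMPION_THRESHOLD = 9.0  # mirrors (real 9.0) in the NXQL above


def summary_score(target_tool_score: float, threshold: float = CHAMPION_THRESHOLD) -> int:
    """Binary persona flag: 10 if the score is strictly greater (gt) than the threshold, else 0."""
    return 10 if target_tool_score > threshold else 0


# Hypothetical Target Collaboration Tool scores for three users.
scores = {"alice": 9.5, "bob": 9.0, "carol": 7.2}

champions = [user for user, s in scores.items() if summary_score(s) == 10]
print(champions)  # ['alice']

# Lowering the threshold picks up more users.
print([user for user, s in scores.items() if summary_score(s, threshold=7.0) == 10])
# ['alice', 'bob', 'carol']
```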

Some fine-tuning may be required to get this pack to produce the results you want. Changing the threshold in the summary score is a quick way to see changes, but for finer-grained control you may also need to change the score ranges in the main score file.

Metrics

The metrics are separated into folders according to the dashboards that reference them. Because of the summary score system used in this pack, in most cases you do not need to modify the metrics to adjust their thresholds.

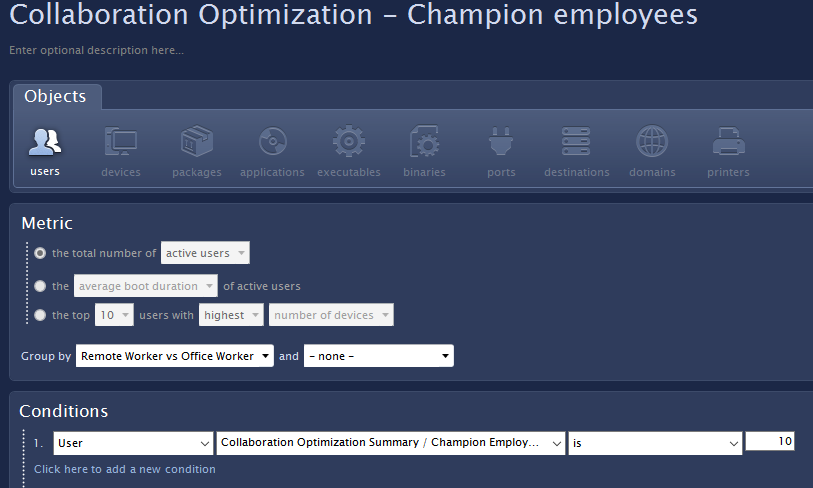

To continue our champion employee example, the summary score output is either 0 (not a champion employee) or 10 (champion employee), as can be seen in the metric screenshot.

Campaign metrics

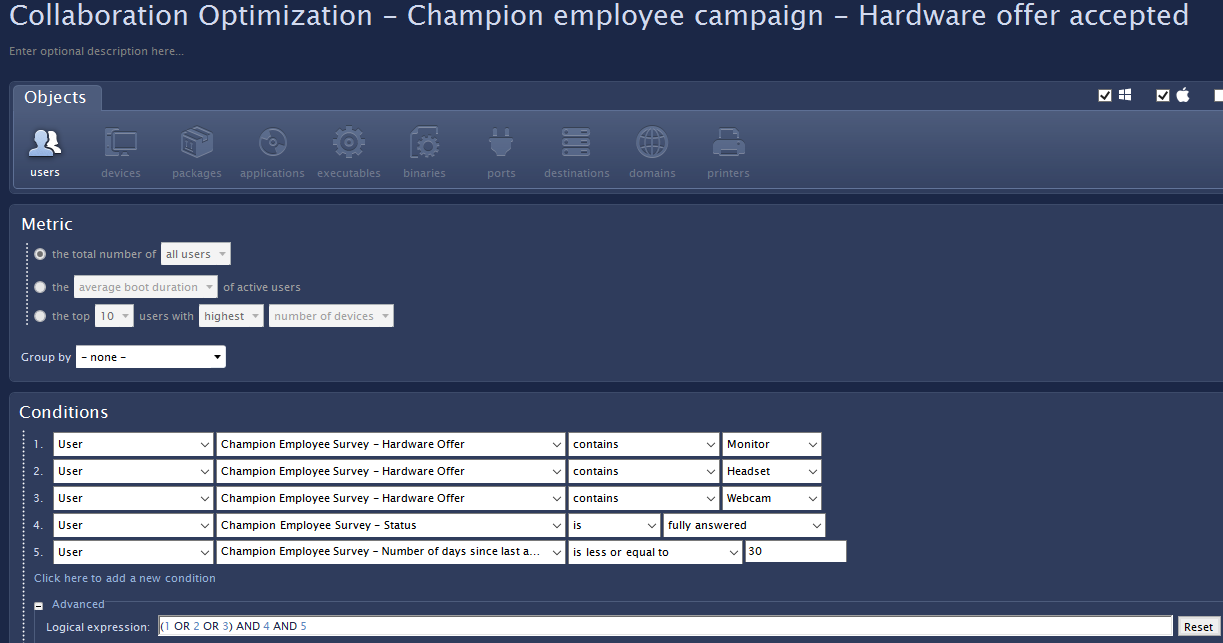

Several metrics take information from the two campaigns included with this pack. In all cases, a condition restricts the responses to these campaigns to the last 30 days:

“<Survey name> - Number of days since last action”.

This is particularly important for the champion employee campaign, through which additional hardware can be requested. Assuming that requests are fulfilled within 30 days, the metrics, and therefore the dashboard, should show only current requests from recent campaigns.
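The 30-day condition behaves like a simple filter on each response's age. The sketch below models it in Python, assuming the condition keeps responses whose "days since last action" value is at most 30; the Response type, its field names and the sample responses are hypothetical.

```python
from dataclasses import dataclass
from typing import List

MAX_AGE_DAYS = 30  # the 30-day window described above


@dataclass
class Response:
    employee: str
    answer: str
    # Stands in for the "<Survey name> - Number of days since last action" field.
    days_since_last_action: int


def recent_responses(responses: List[Response], max_age_days: int = MAX_AGE_DAYS) -> List[Response]:
    """Keep only responses whose last action is at most max_age_days old."""
    return [r for r in responses if r.days_since_last_action <= max_age_days]


# Hypothetical champion-campaign responses.
responses = [
    Response("employee_a", "Headset", 3),
    Response("employee_b", "Webcam", 30),
    Response("employee_c", "Second monitor", 45),
]
print([r.answer for r in recent_responses(responses)])  # ['Headset', 'Webcam']
```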

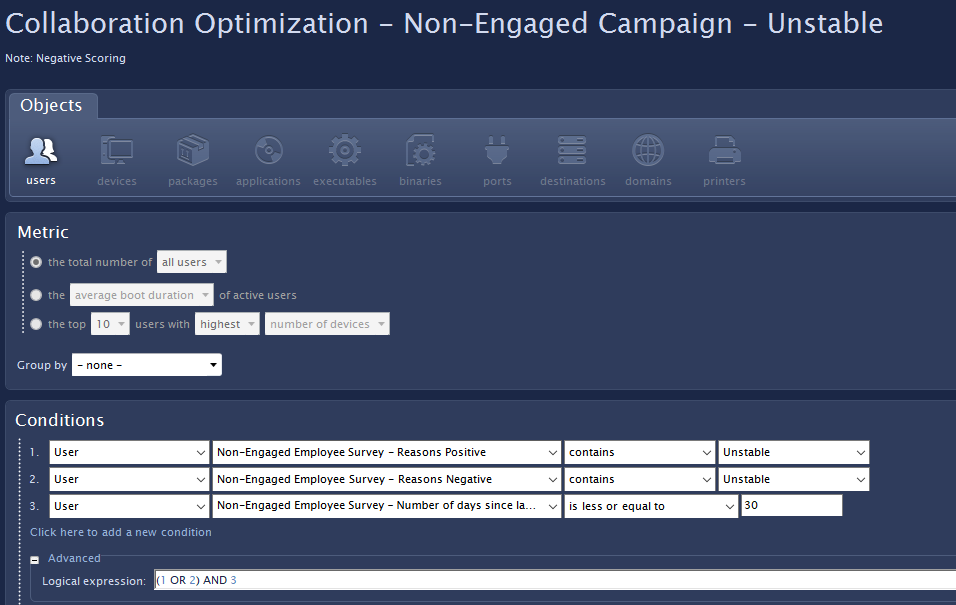

The non-engaged employee campaign has two questions with similar statements. Recipients receive one or the other depending on their response to the first question. As some statements are the same in both questions (e.g. “Application is unstable”), the responses to both questions have been combined into one metric and one dashboard.

This makes the dashboard clearer and reduces the number of metrics in the pack. If you would prefer two dashboards showing the responses to each question separately, duplicate the relevant metrics and modify them accordingly.
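Because each recipient sees only one of the two questions, adding the per-statement counts from both questions does not count any respondent twice. The sketch below shows that merge with collections.Counter; only the “Application is unstable” statement comes from the text, and the other statements and all counts are hypothetical.

```python
from collections import Counter

# Hypothetical response counts for the two alternative follow-up questions.
question_a = Counter({"Application is unstable": 4, "Not enough training": 2})
question_b = Counter({"Application is unstable": 3, "Prefer another tool": 5})

# Statements shared by both questions are summed; the rest are carried over.
combined = question_a + question_b

print(combined["Application is unstable"])  # 7
print(combined.most_common())
# [('Application is unstable', 7), ('Prefer another tool', 5), ('Not enough training', 2)]
```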

Dashboards

There are four dashboards included in this pack.

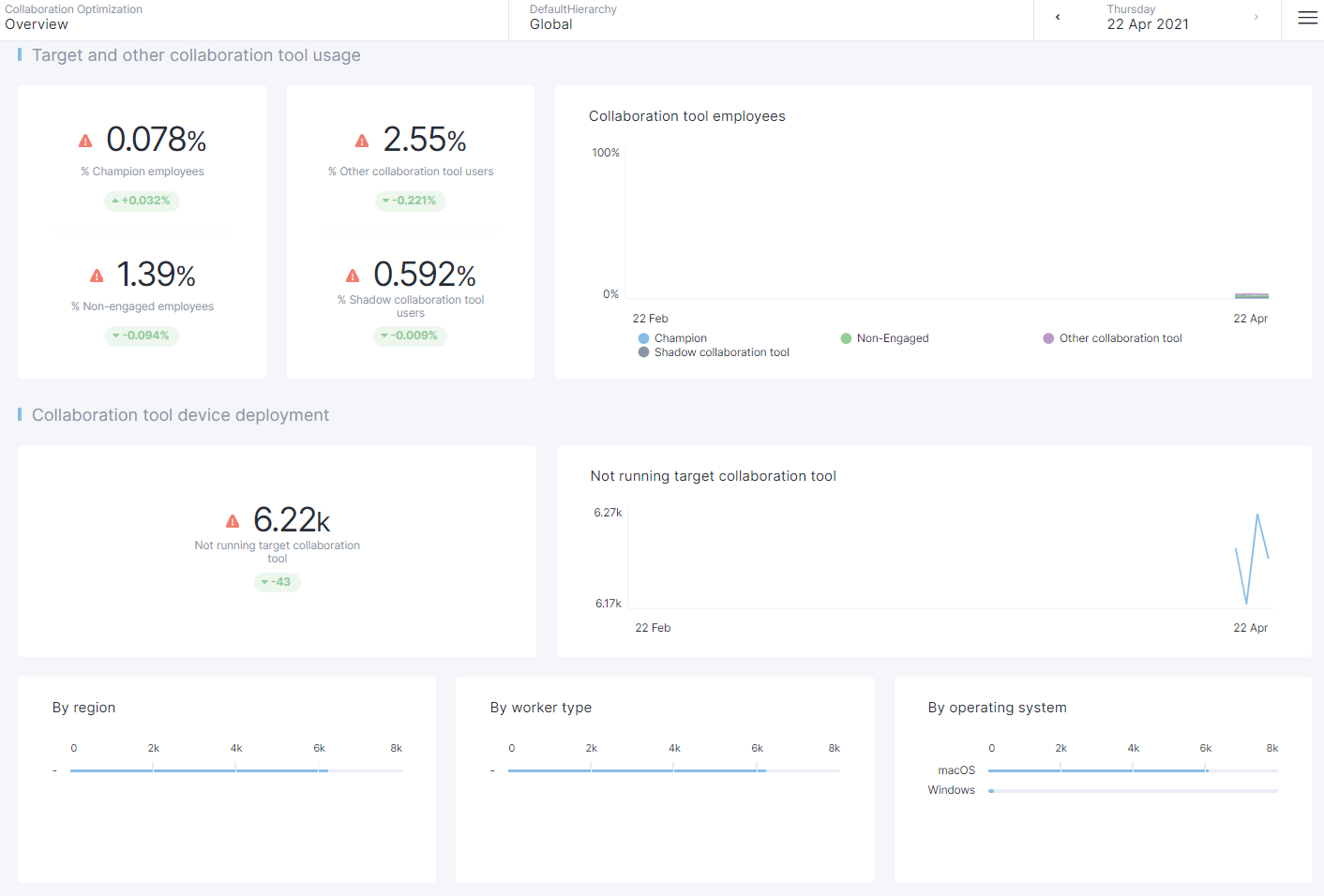

Overview

This dashboard summarizes your collaboration landscape, showing key employee activity alongside the technical deployment and stability of your collaboration tools.

This is a summary dashboard; more detailed information is available in the other dedicated dashboards in this pack.

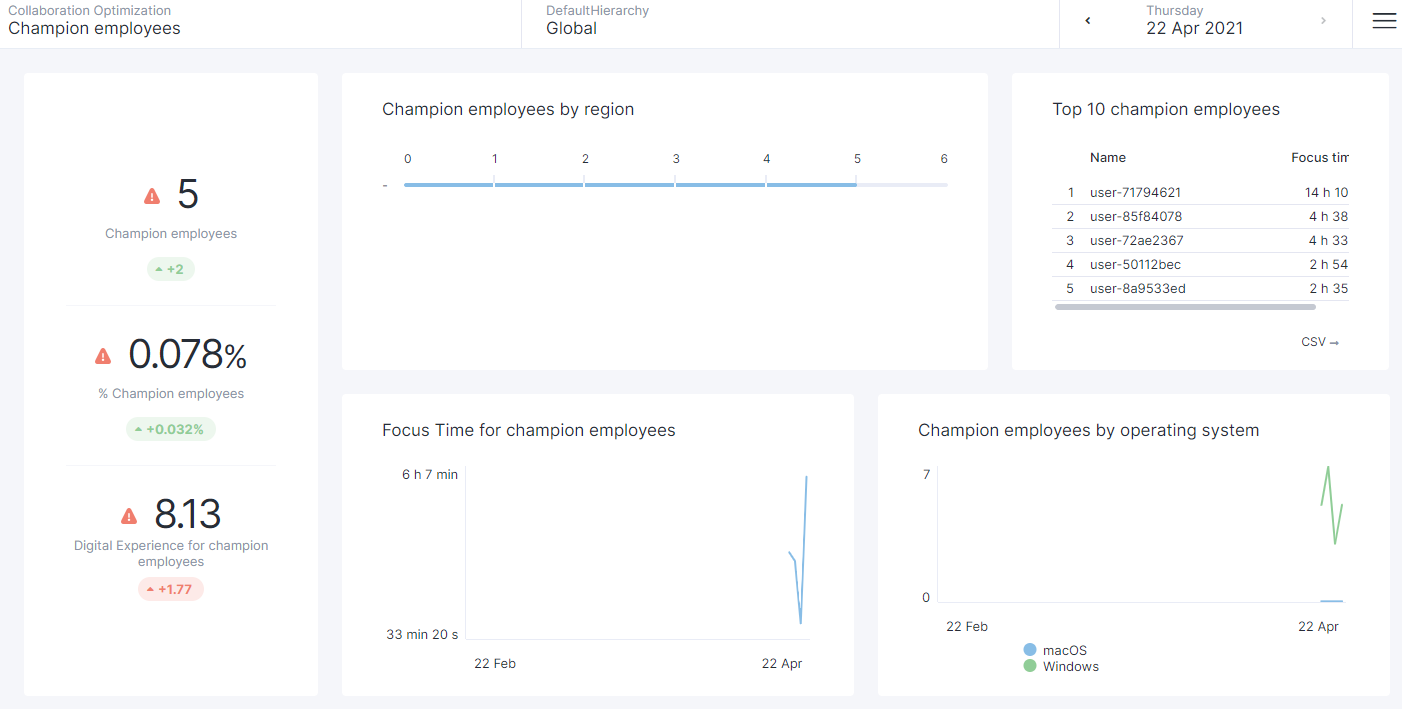

Champion employees

This dashboard shows more detail on the employee “champions” who are fully engaged with your target real-time collaboration tool.

Champion employees can be of great use to you, as they can act as evangelists for the product, driving adoption amongst their colleagues.

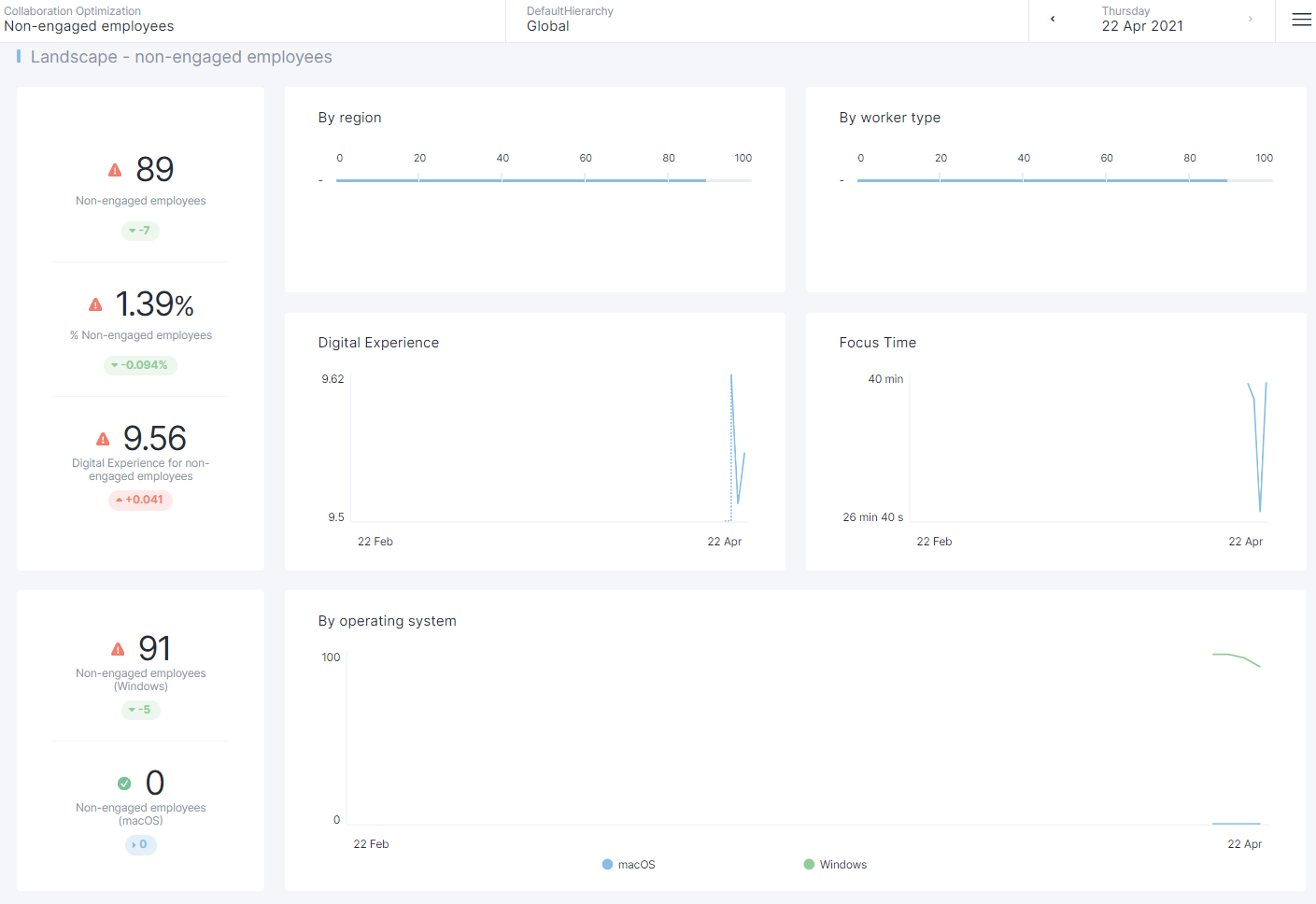

Non-engaged employees

This dashboard provides more detail on the employees who are not engaged with your target real-time collaboration tool.

Landscape

This section shows the number and ratio of non-engaged employees, and also the Digital Experience score of their devices.

The number of non-engaged employees is listed by region and worker type, and their Digital Experience and Focus Time are tracked over time.

There is also a breakdown by operating system.

The next sections provide more information on the possible reasons for non-engagement with the collaboration tool.

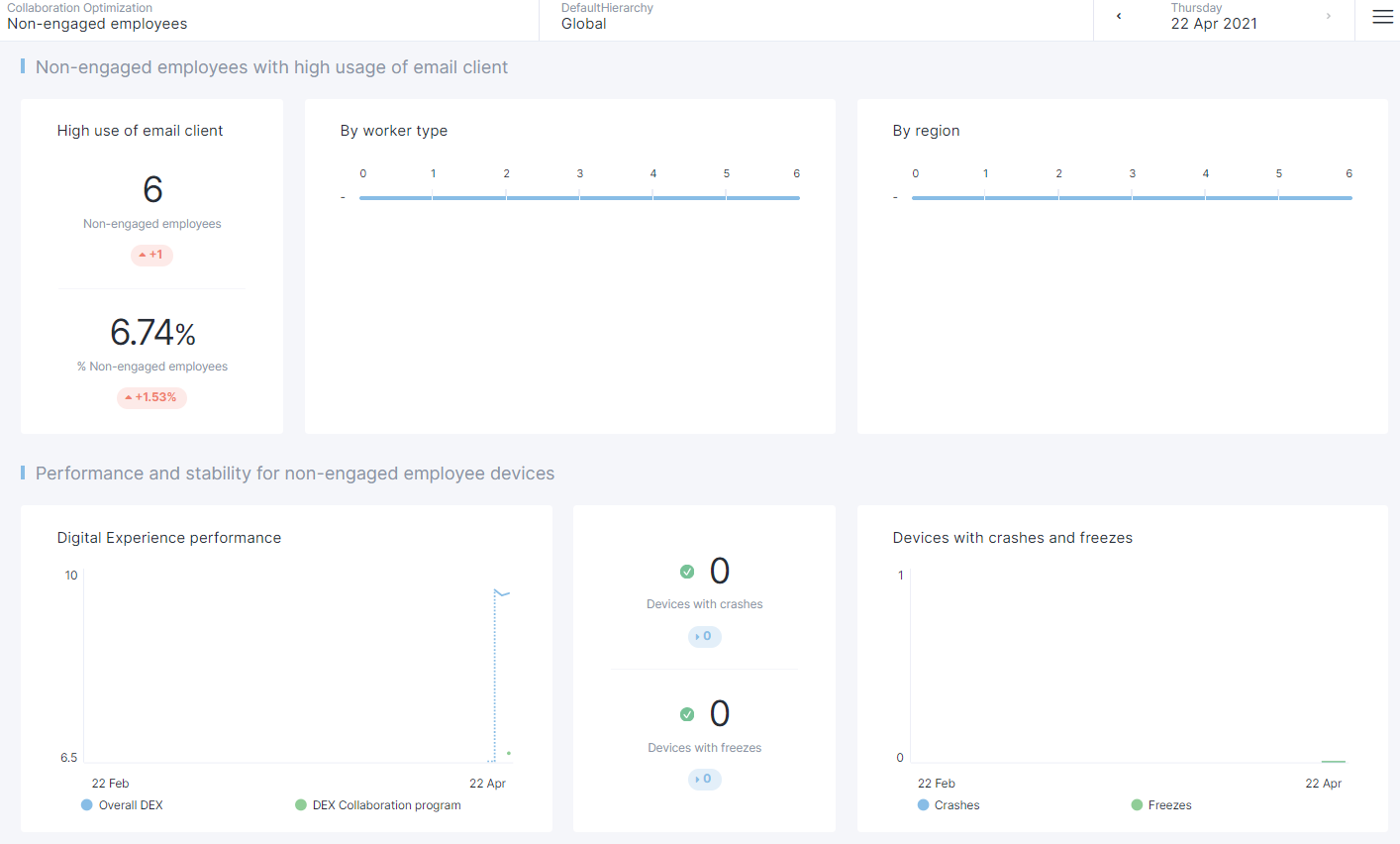

Non-engaged employees with high usage of email client

One possible reason for non-engagement with a collaboration tool is over-reliance on a (possibly more familiar) email client. Some roles naturally rely on email more than others, but this section shows the non-engaged employees who have shown a strong preference for an email client, as measured by Focus Time.
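A minimal sketch of the selection this section performs, assuming "high usage" means weekly email-client Focus Time at or above a fixed cut-off. The cut-off of 10 hours and the employee data are hypothetical; the pack's own metric may define the threshold differently.

```python
# Hypothetical weekly email-client Focus Time (hours) for non-engaged employees.
non_engaged_email_hours = {
    "employee_a": 18.0,
    "employee_b": 4.0,
    "employee_c": 11.0,
}

EMAIL_HOURS_THRESHOLD = 10.0  # hypothetical cut-off for "high" email usage


def heavy_email_users(email_hours, threshold=EMAIL_HOURS_THRESHOLD):
    """Return non-engaged employees whose email-client focus time meets the cut-off."""
    return sorted(name for name, hours in email_hours.items() if hours >= threshold)


print(heavy_email_users(non_engaged_email_hours))  # ['employee_a', 'employee_c']
```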

As you move employees away from email towards real-time collaboration, use this dashboard to identify the employees to contact, and consider using Engage campaigns to promote the benefits of real-time collaboration.

Performance and Stability

Another reason for non-engagement could be generally poor device performance or instability of the collaboration tool in question.

Taken together, these indicators may give your support teams additional insight into why the listed employees are not engaged with the target collaboration tool.

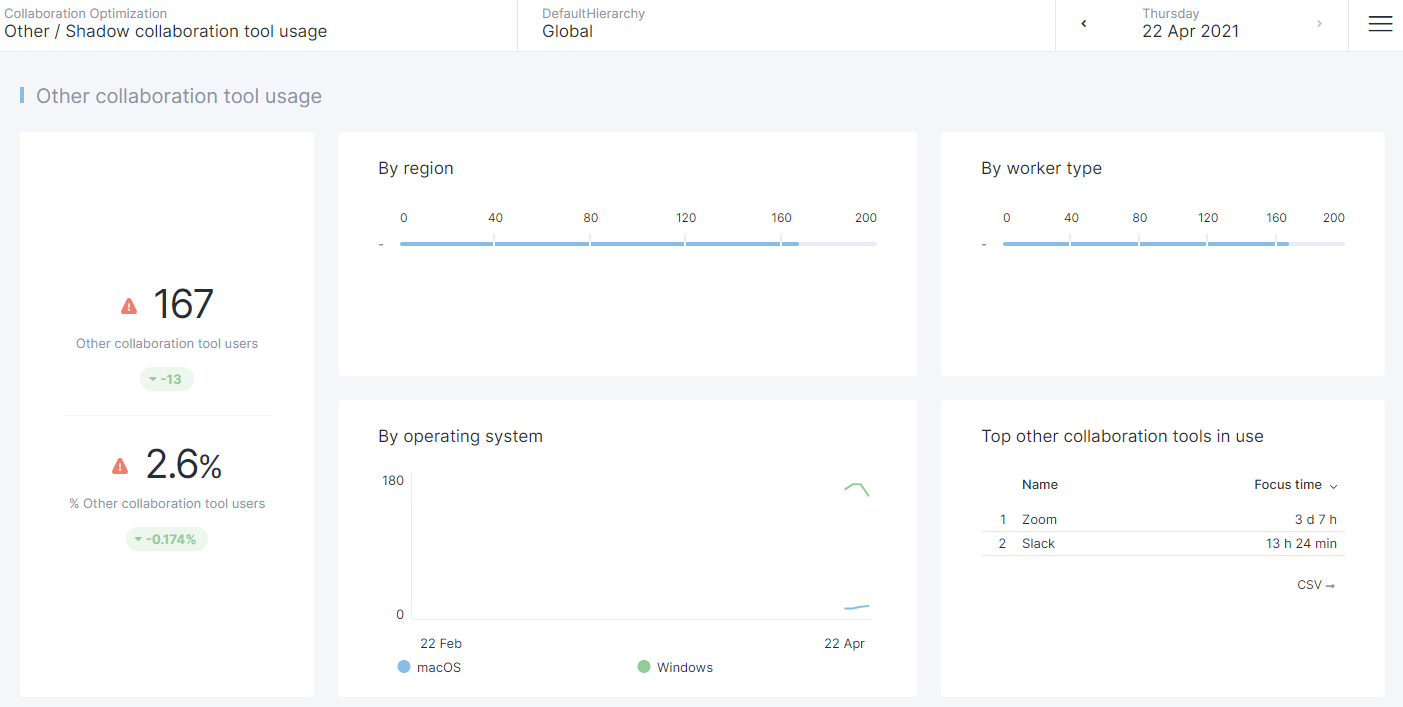

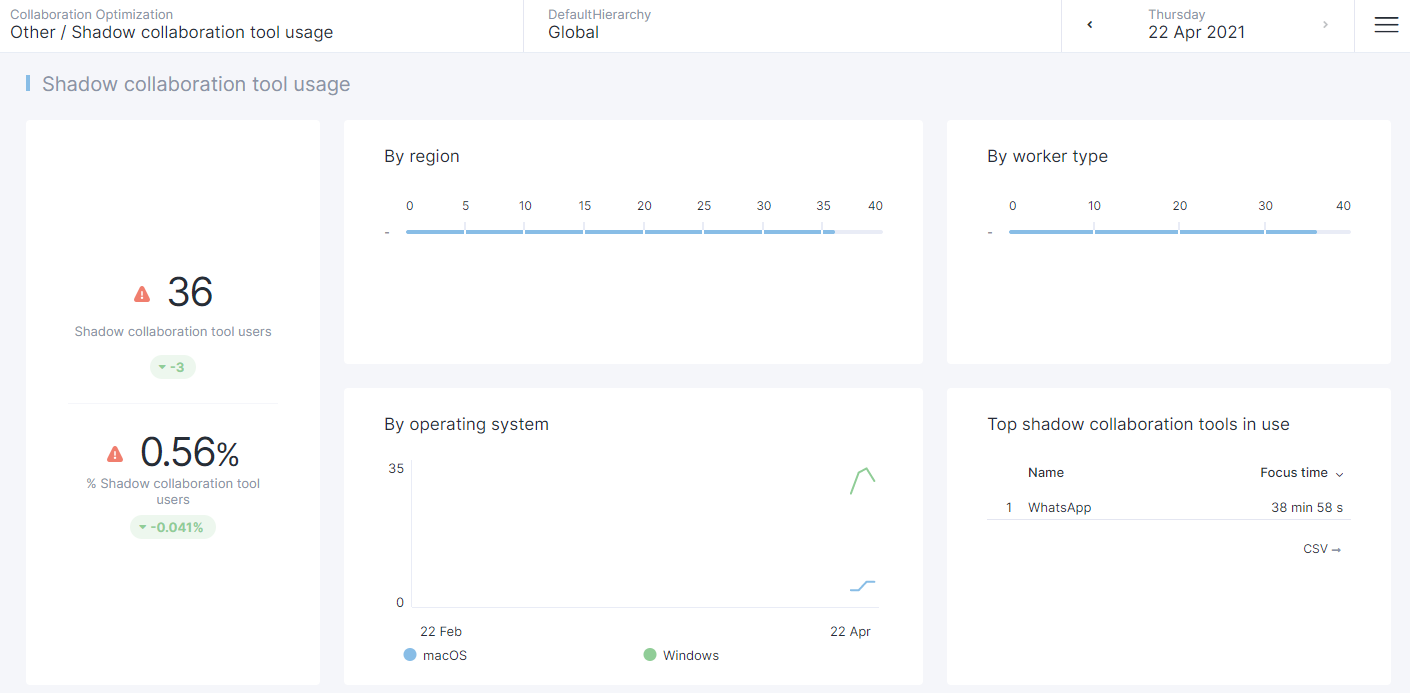

Other / Shadow collaboration tool users

This dashboard provides more detail on those employees who, whilst they may use the target collaboration tool, have indicated a preference for a different collaboration tool.

This dashboard differentiates between two types of behaviour:

The use of an “other collaboration tool” - an application supported by the organization but not in general use.

The use of a “shadow” collaboration tool - an application that is not approved by the organization’s IT department.

Campaigns

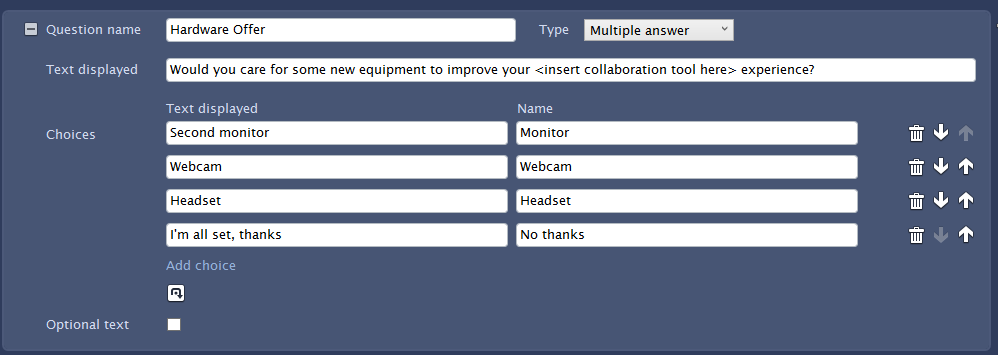

This pack includes two campaigns, each targeted at a different employee persona. The first is designed to be sent to the “champion employees”, offering them additional hardware that could improve their experience of the collaboration program, such as a dedicated webcam, a headset or, if they don’t already have one, a second monitor.

Champion employee survey

Given the nature of this campaign, if you choose to use it, take care over the list of employees it is sent to. Set the score thresholds carefully to ensure that your champion employees are a suitably small subset of your organization. Only limited customization of this campaign is required before sending, although you may wish to change the list of hardware offered to recipients. The only mandatory modification is to enter the name of the collaboration tool you are monitoring with this pack, replacing the placeholder <insert collaboration tool here>.

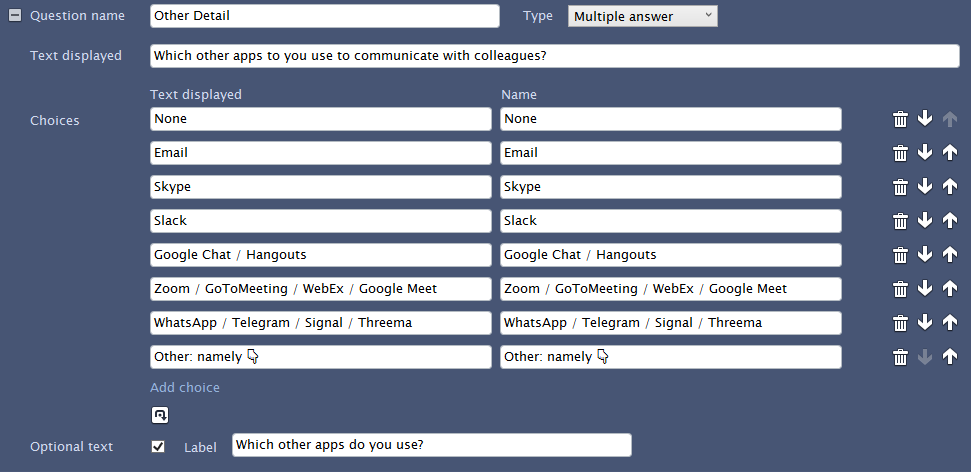

Non-engaged employee survey

The second campaign targets the non-engaged employees to find out why they do not make full use of the target collaboration tool provided.

The campaign starts by asking whether respondents consider the target application to be their primary collaboration tool. Depending on the answer, one of two similar multiple-response questions follows to help determine the cause of their non-engagement.

The final question asks whether respondents have a preference for a different collaboration tool, and if so, which one(s).
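The branching can be sketched as a function that returns the sequence of questions a recipient sees. The question labels and wording are hypothetical, and which follow-up question corresponds to which answer is an assumption; the point the sketch shows is that every recipient gets the same first and last questions but exactly one of the two follow-ups.

```python
from typing import List


def question_path(target_is_primary: bool) -> List[str]:
    """Return the questions a recipient sees (hypothetical labels and wording)."""
    path = ["Q1: Is <tool> your primary collaboration tool?"]
    # Exactly one of the two similar follow-up questions is shown.
    if target_is_primary:
        path.append("Q2a: What stops you from using <tool> more?")
    else:
        path.append("Q2b: Why is <tool> not your primary collaboration tool?")
    path.append("Q3: Do you prefer a different collaboration tool? If so, which one(s)?")
    return path


for answer in (True, False):
    print(question_path(answer))
```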

As with the previous campaign, you need to enter the name of the collaboration program you are tracking, in this case in three questions. You may also wish to modify the list of suggested alternative collaboration tools in the final question.

Change Log

V1.0.0.0

Initial Release